It’s been a long time coming.

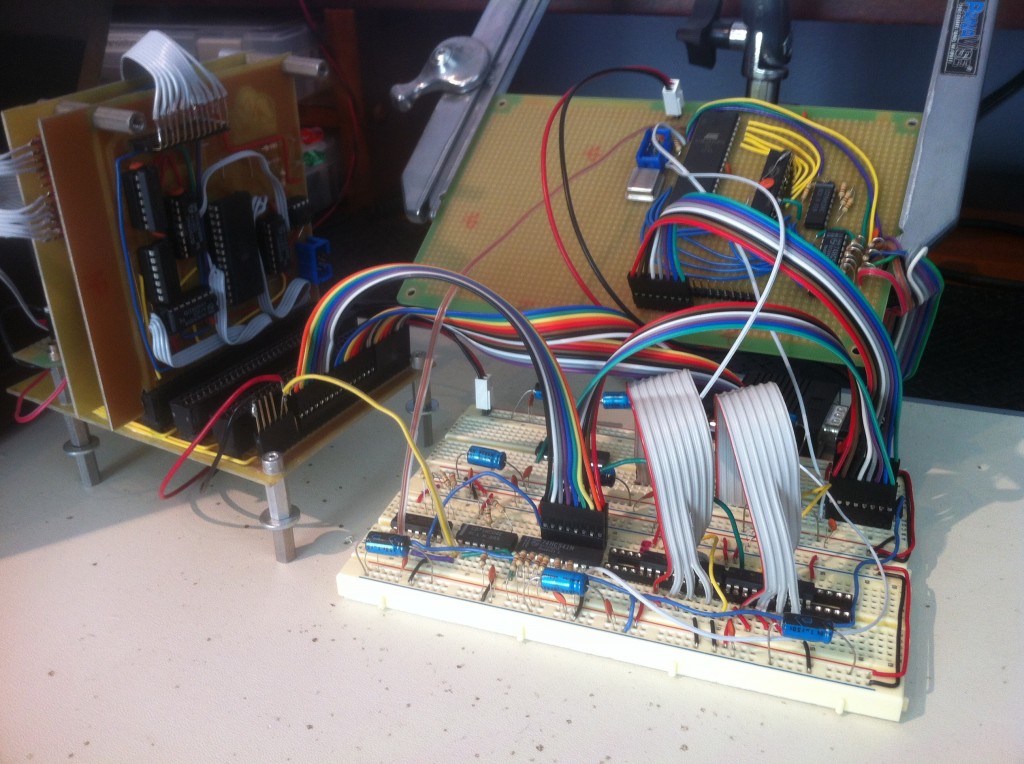

It’s been quite an odyssey getting to this point. One of the tougher challenges I’ve encountered so far on this project is setting up an interface between the 6502 CPU and the ATMega324pa GPU. Both processors are quite good at their little jobs in their respective worlds, but getting them to talk to each other nicely has been educational indeed. I’ve spent many an hour over the past few months with this test setup on my bench:

The reason this is so challenging is that this is where everything I’ve done so far on Veronica meets in the middle. This is where I find out if my bus architecture is any good, if my ROM code is executing properly on the 6502, if my memory-map address decodes are right, if my GPU code is talking to the VRAM properly, if the VGA signals are all being generated properly, and a thousand little details I’m glossing over here. The point is that, while I tested all these systems in isolation, you find all manner of new problems when you start connecting them to each other.

To recap, my overall design for this is that the GPU acts pretty independently, and handles all the pushing of pixels. The CPU only sends small commands about what things to render in what locations. In 8-bit tech terms, this is closer to a Nintendo than an Apple //. Old game consoles (and some of the better 8-bit computers) used custom chips for generating and rendering video, since it’s difficult for a slow CPU to do and still have any time left to do anything else. The ATMega in Veronica is basically playing that role. I can’t fabricate custom silicon, but I can fake it with software on a fast microcontroller.

My initial plan for CPU/GPU communication was to use the extra memory I have at the top of my frame buffer as shared space for both processors. Since the frame buffer is only about 60k in size, I have 4k at the top that isn’t in use. I figured I would use that space for (among other things) receiving commands from the CPU for what to render.

Well, after many trials and tribulations, I ended up settling on something quite a bit simpler than sharing the upper 4k of VRAM. Getting the two processors to share that memory without disrupting the VGA signal generation proved very difficult, and more trouble than it was worth. The AVR isn’t really designed to use external memory, and it really isn’t designed to share external memory with someone else. As a result, it just doesn’t have the right set of interrupts and control signals that would be needed to do this well. Furthermore, I simply ran out of pins! Using external 16-bit parallel-access RAM eats up a great many pins, and the VGA generator chews up the rest. I needed to accomplish this communication channel with the CPU using very few control signals.

What I ended up doing was setting up a sort of “virtual register” on the ATMega. This acts as an 8-bit buffer that can receive a command from the CPU asynchronously. This register is memory-mapped into the 6502’s memory space. Processing the command in this register is done on an interrupt on the ATMega, so that the CPU won’t have to wait. However, the interrupt structure on the AVR isn’t set up quite right for this. The VGA signal generator is using a timer interrupt, but this virtual register needs to trigger an external interrupt. External interrupts on the AVR are always higher priority than internal ones, so the CPU will unavoidably be able to interrupt the VGA signal generator.

To compensate for that, the CPU has to play nice and only send commands during the vertical blank (VBL). That means it needs to know when the vertical blank is! So, I make this same “virtual register” bi-directional, and it does double-duty as a status register for the GPU. The GPU sets a bit to indicate the VBL is underway, and it’s safe to send rendering commands.

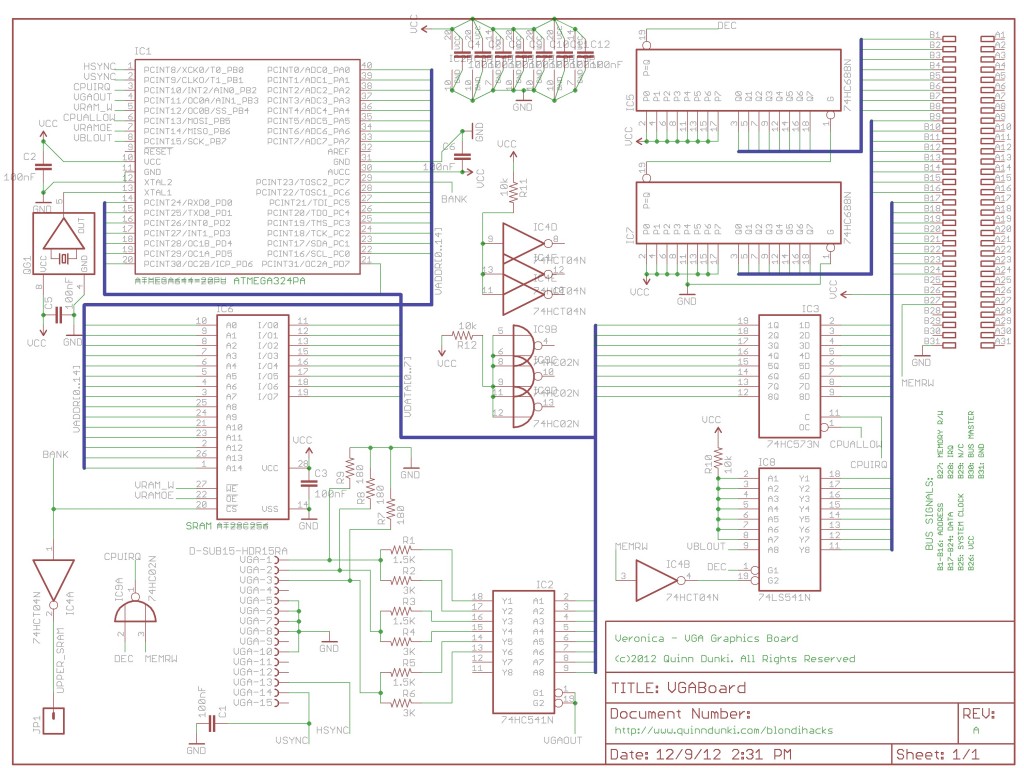

The virtual register maps to memory address $EFFF, which is an area reserved in Veronica’s memory map for communicating with peripherals and such. The VGA board has some address decode logic that detects attempts to read or write to that address, and reads or writes the virtual register in response. The address decode logic is a pair of 74HC688 8-bit comparators, which are great chips for decoding a single address without a ton of boolean logic. To save even more chips, both the “reading” and “writing” versions of this communication register are at the same address.
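To make the decode concrete, here is a small behavioural model in C. It assumes the two 74HC688s are cascaded, with the high-byte comparator's active-low P=Q output feeding the low-byte comparator's active-low enable. The post doesn't say exactly how the pair is wired, so treat that as an assumption; the function names are made up for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Behavioural model of one 74HC688: the active-low output /P=Q goes low
   only when the active-low enable /G is low and P equals Q. */
static bool hc688_out_n(uint8_t p, uint8_t q, bool enable_n) {
    return !(!enable_n && p == q);
}

/* Assumed wiring (hypothetical): the high-byte comparator is always enabled,
   and its /P=Q output drives the /G enable of the low-byte comparator. */
static bool efff_selected(uint16_t address) {
    bool high_match_n = hc688_out_n((uint8_t)(address >> 8), 0xEF, false);
    bool low_match_n  = hc688_out_n((uint8_t)(address & 0xFF), 0xFF, high_match_n);
    return !low_match_n; /* turn the active-low result into true = selected */
}
```

With this model, `efff_selected(0xEFFF)` is true, while neighbours such as $EFFE or $FFFF are rejected by one comparator or the other.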

The trick here is that this “virtual register” is really two different chips that are selected by the memory R/W signal from the CPU. When the CPU attempts to read, a 74HC541 buffer is activated, which drives the data bus. The inputs on the ‘541 are connected to PORTB on the ATMega, which handles all the control signals (including the VBL). When the CPU attempts to write to $EFFF, the ‘541 is switched off, and a 74HC573 8-bit latch is activated. This needs a little more explanation.

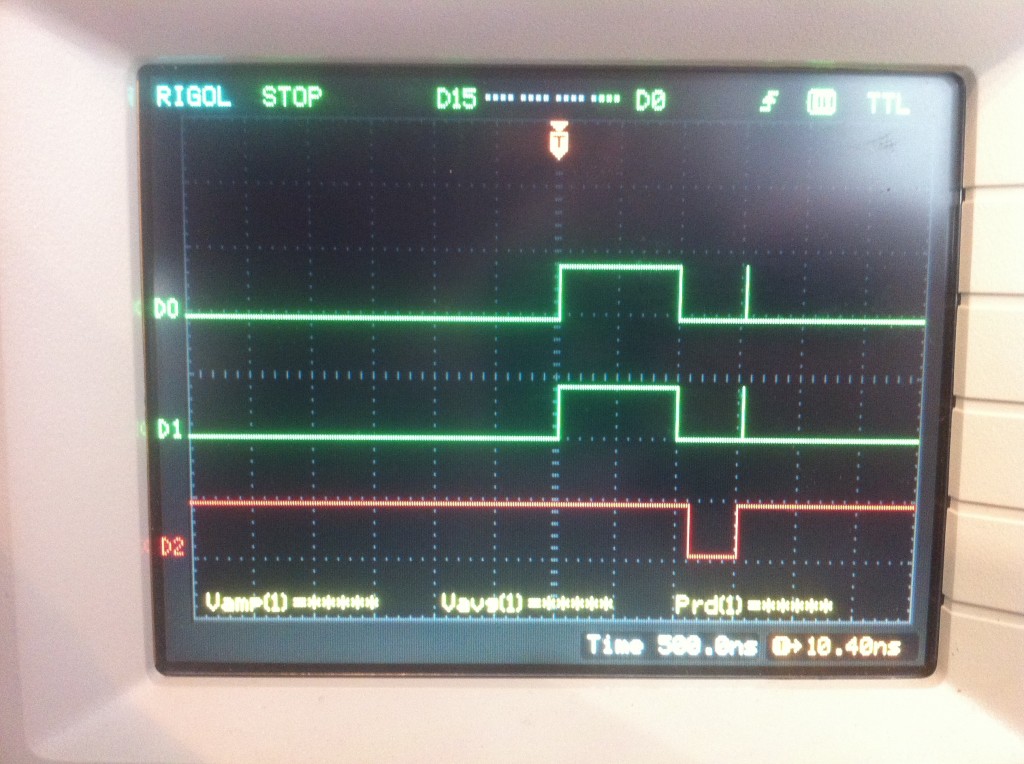

You see, when the CPU sends a rendering command, the GPU can’t process it right away. It may be busy doing other things (such as other rendering tasks). So, the virtual register interrupt handler needs to send out a signal when it’s ready and the GPU’s data bus is free for use by the CPU. There’s a small delay there, because the GPU needs to handle the interrupt and set up some states to receive the data. During that delay, the CPU will have already moved on and given up writing its command to the data bus. Here’s what that looks like on the oscilloscope:

The green pulses are the “address decoded” and “write” signals (active high) from the interface logic, indicating that the CPU is writing a command to the virtual register right the hell now. The red pulse (active low) is the GPU saying, “okay, I’ve cleared all other traffic off my data bus, go ahead and write to it”. However, see that little gap between when the green pulses end and the red one begins? By the time the GPU says “go ahead”, the CPU has already given up.

My solution is to latch the data into a bank of flip-flops, so that the GPU can get to it when it’s ready, and the CPU can keep calm and carry on. That’s the magic of the 74HC573.
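The read/write split can be sketched as a tiny software model. This is an illustration only: the struct and function names are hypothetical, the `command_ready` flag stands in for the interrupt line to the ATMega, and no particular bit position is assumed for the VBL flag.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model of the $EFFF "virtual register": one address,
   two chips, selected by the 6502's R/W line. */
typedef struct {
    uint8_t gpu_portb;     /* status bits the GPU drives into the '541 inputs */
    uint8_t latch;         /* contents of the 74HC573 latch */
    bool    command_ready; /* stands in for the interrupt raised to the GPU */
} VirtualRegister;

/* CPU read (R/W high): the '541 is enabled and copies PORTB onto the data bus. */
static uint8_t cpu_read(const VirtualRegister *vr) {
    return vr->gpu_portb;
}

/* CPU write (R/W low): the '541 is off and the '573 captures the bus value. */
static void cpu_write(VirtualRegister *vr, uint8_t data) {
    vr->latch = data;
    vr->command_ready = true;
}

/* GPU side, some time later: the CPU has already moved on,
   but the byte is still held in the latch. */
static bool gpu_fetch(VirtualRegister *vr, uint8_t *out) {
    if (!vr->command_ready) {
        return false;
    }
    *out = vr->latch;
    vr->command_ready = false;
    return true;
}
```

The point of the latch shows up in `gpu_fetch`: the GPU can pick up the byte whenever it gets around to it, because `cpu_write` stored it instead of leaving it on a bus the CPU is about to release.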

So let’s cut to the chase. What does all this look like? Well, brace yourself. Here’s what I believe will be version A of Veronica’s VGA display board.

It’s easily the most complex Veronica board to date, but it’s really not as gnarly as it looks once you understand the basic workings of it (which I hopefully explained well enough above).

Here’s the prototype, finally working after many, many long nights. My hands still bear the imprint of the logic probe (incidentally, one of the more underrated tools in hacking circles, in my opinion).

There’s some critical code needed to drive this logic on the GPU side. When commands come in over the system data bus and are decoded, an IRQ is sent to the AVR. That’s handled like so:

That interrupt handler needs to be as simple and quick as possible, so as not to disrupt any other GPU rendering that may be executing. Note that even this short little handler is enough to disrupt the VGA sync pulses (and cause the monitor to lose signal lock), which is why it’s important that the CPU only act during the vertical blank. This handler simply copies the command byte to a buffer register for later processing in the GPU’s main code loop.
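Since the handler itself isn't reproduced here, this is a host-side C sketch of the same split, not the actual AVR code: the variable and function names are hypothetical, and `on_command_interrupt` stands in for the real interrupt vector.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical names. On the real AVR these would be written from an ISR,
   so they are volatile: the main loop must re-read them every time. */
static volatile uint8_t pending_command;
static volatile bool    command_pending;

/* Stand-in for the external-interrupt handler: do the bare minimum
   (grab the latched byte, raise a flag) and return immediately. */
static void on_command_interrupt(uint8_t latched_byte) {
    pending_command = latched_byte;
    command_pending = true;
}

/* Called from the main loop: consume at most one pending command. */
static bool take_command(uint8_t *out) {
    if (!command_pending) {
        return false;
    }
    *out = pending_command;
    command_pending = false;
    return true;
}
```

On the real chip, the read-and-clear in `take_command` would want interrupts briefly disabled (for example with avr-libc's `ATOMIC_BLOCK` from `<util/atomic.h>`); otherwise a command arriving between those two statements could be dropped.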

Speaking of the GPU’s main code loop, here it is:

This is just a temporary mockup, but you can see that ultimately it will be a simple jump table to the various rendering routines provided by the GPU.
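A jump table like that might look roughly as follows in C. The command numbers $03 (draw the test sprite) and $04 (clear the screen) come from the test program later in this post; everything else, including the routine names and the no-op fallback, is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical renderer stubs; they only record which routine ran. */
static int last_routine = -1;
static void cmd_nop(void)          { last_routine = 0; }
static void cmd_draw_sprite(void)  { last_routine = 3; } /* $03: 8x8 test sprite */
static void cmd_clear_screen(void) { last_routine = 4; } /* $04: clear screen */

typedef void (*RenderRoutine)(void);

/* Jump table indexed by the command byte; unassigned slots are no-ops. */
static const RenderRoutine jump_table[] = {
    cmd_nop, cmd_nop, cmd_nop, cmd_draw_sprite, cmd_clear_screen,
};

static void dispatch(uint8_t command) {
    size_t count = sizeof jump_table / sizeof jump_table[0];
    if (command < count) {
        jump_table[command]();
    } else {
        cmd_nop(); /* ignore unknown commands instead of jumping into the weeds */
    }
}
```

Bounds-checking the command byte costs a compare, but it keeps a garbage byte on the bus from sending the GPU off to a random address.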

Now let’s see it in action. For that, the CPU needs to actually request a command. For a very long time, Veronica’s CPU has frankly been doing squat besides sitting there looking all “retro”. Sure, I built a fancy on-board EEPROM programmer and everything, but it’s been waiting for some way to interact with the outside world. Now, fingers crossed, we have that. Here’s some test code in 6502 assembly that I put in ROM to run at startup:

That code waits 4 seconds (roughly, assuming a 1 MHz clock speed), then starts polling the GPU status register by reading from $EFFF. It first waits for the VBL to go low, then waits for it to go high again. This is to prevent attempting some rendering right at the end of the blanking. If we start rendering and the VBL is already underway, we don’t know how much time we have. By waiting for the VBL signal to go low, then high, we ensure we’re catching the start of the blanking period. Note that we can attempt rendering at other times if we want, but it will cause visual glitches on the display, and can potentially cause the monitor to lose sync for a moment if one of the critical control pulses is interrupted. This is not so different from 1980s computers, except they were immune to losing the video signal lock because they generated their critical sync signals in hardware.

Once the CPU has found the VBL, it sends two commands by writing values to $EFFF. The first one ($04) is the command to clear the screen. The second command ($03) renders a test sprite which is an 8×8 pixel red box.
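Here is the same VBL-hunting logic as a C simulation, useful for convincing yourself why it waits for the flag to drop before waiting for it to rise. The `Sim` struct, its frame and blanking lengths, and the one-tick cost per poll are all made up; only the wait-low-then-high sequence and the $04/$03 command values come from the post.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical simulation: the VBL flag is high for the first vbl_len
   ticks of every frame_len-tick frame. */
typedef struct {
    unsigned tick, frame_len, vbl_len;
    uint8_t  written[2];
    unsigned writes;
} Sim;

static bool read_vbl(Sim *s) {
    bool vbl = (s->tick % s->frame_len) < s->vbl_len;
    s->tick++; /* each poll of $EFFF takes time */
    return vbl;
}

static void write_efff(Sim *s, uint8_t value) {
    if (s->writes < 2) {
        s->written[s->writes++] = value;
    }
}

/* Returns the tick at which the rendering commands were issued. */
static unsigned send_commands_at_vbl_start(Sim *s) {
    assert(s->vbl_len > 0 && s->vbl_len < s->frame_len); /* else the loops never exit */
    while (read_vbl(s)) { }  /* 1: if a blank is already underway, let it finish */
    while (!read_vbl(s)) { } /* 2: now wait for the next blank to begin */
    unsigned start = s->tick;
    write_efff(s, 0x04);     /* clear screen */
    write_efff(s, 0x03);     /* draw the 8x8 red test sprite */
    return start;
}
```

Whether polling starts mid-blank or mid-frame, the commands go out right after the first poll that sees a fresh blank begin, so the GPU gets close to the full blanking period to do the work.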

Incidentally, that code was written and assembled with this nice little online 6502 assembler that Google turned up. If I were being a true purist, I’d be hand-assembling all code at this point, since it’s technically cheating to use modern tools to get the old-timey code in there. In the old days, until the computer was up enough to use it to write its own code, hand-assembly was the only way! Well, I’ve done my time (back in the day) in that regard, so I’m willing to skip that part of the retro experience now. If pressed, I could probably still rattle off the bit patterns for all the 68000’s addressing modes from memory. Good times.

So, with that code uploaded to Veronica’s main ROM, does it work? Let’s find out. In this video, I power up Veronica, and you see the usual splash screen being rendered by the GPU (that’s hardcoded, and has nothing to do with the CPU). Then I flip the switch to start the CPU, which runs the code shown above.

Doesn’t seem like much, clearing the screen and drawing a little red box by the CPU’s request. But a whole, whole, whole lot of things have to work in perfect harmony to make that happen. It’s been a good day.

Awesome! I really like reading about this project since I want to do something similar. Mine will eventually become a Z80 powered computer. Since I don’t have an EPROM programmer, I used a PIC16F877 to communicate over serial with my PC and load my program into an SRAM chip which is used as ROM (in the eighties people would be jealous… (ab)using SRAM as ROM…).

For the video part I want to use an FPGA (which I got today). No “messy”, “wait for blank signal”-solution for me, thank you 😛

Unfortunately I don’t have time to finish it (college, work, etc.). 🙁

For ROM, I’m doing the same thing as you describe, actually, except I’m using a little ATTiny as the in-line programmer. The EEPROM and SRAM have the same pinouts, so you can use either/or. Check out these posts for the details:

http://blondihacks.com/?p=708

http://blondihacks.com/?p=780

http://blondihacks.com/?p=805

The only trick is that EEPROM can be very slow to write to. I coded the ATTiny to use a special burst mode supported in that particular brand of EEPROM chip. It was tricky to get it working, but worth every bit (pardon the pun) of effort because I can now upload new ROM code as fast as I can write it.

For graphics, I think an FPGA is really the better way to do it. This is fundamentally a hardware problem, and bitbanging the solution with software is neat, but not really very robust. I opted to do it this way because I didn’t want to tool up on FPGAs just yet, and I was hoping to build something that any hobbyist could replicate with a few clicks in the Jameco catalog.

As for time, well, that only gets less available as you get older, trust me. 🙂

Yeah, FPGAs are on a different level, and they require more effort to get working. But one of the biggest advantages is that you can actually offload drawing certain primitives from the CPU (if you ‘code’ them in your hardware).

I don’t know how many cycles would be spent on, let’s say, displaying animations, since the CPU has to update the frame buffer. Are there any cycles left for doing other things? That’s actually the reason why I want to try to solve it with an FPGA.

*realizes the bigger lack of time when getting older*

*dramatic scream*

NOOOOOOOOOOO!

Well, it depends on how fancy you want the end result to be. If you’re unfamiliar with the 8-bit computing era, fire up an emulator for a Commodore-64, an Apple //, or an Atari 800. You might be surprised what can be achieved at decent resolutions with only 1MHz worth of muscle to do everything. My 20MHz AVR is a monster in comparison. Even after bit-banging all the video signals, there’s still plenty left. And of course, the CPU is still completely idle!

I’m not trying to outdo a PlayStation. I just want something that can show some text and maybe play a decent game of PacMan.

Even more educational for young whippersnappers is the Atari 2600. It had no framebuffer at all. You rendered pixels by changing the color of the raster scan beam as fast as you could (again, at 1 MHz). If Veronica can be half as fun as Pitfall, I’ll be happy.

Congratulations on getting it all to work together; it’s truly inspiring. I wish I had the time and space to set up my workbench again and tag along… 🙂

PS: I know it’s just test code, but I had to:

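```
WVBLLO:
    lda $efff       ; read the GPU status register
    beq WVBLLO      ; loop while it reads zero
    lda #$ff
WVBLHI:
    cmp $efff       ; compare the status register against $FF
    bne WVBLHI      ; loop until it reads exactly $FF
```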

All that fancy optimizing and you let slide the missing # at the top of my code? 😉

It’s okay, you’re forgiven because your domain has the name of one of my favorite C-64 games of all time. 🙂

And while we’re on the subject of errors, a comment in my AVR code is wrong. The INT2 interrupt is configured to be rising edge, not falling edge. I’ll fix both of those when I can get back to my computer. Whoops!

Well, I figure that $00ff initializes to either $00 or $ff, so it’ll work fine anyway 🙂

It’s like the world’s worst random number generator. 🙂

I suppose the next step now is implement lots and lots of commands like you did that “draw a square” one, right? (Like generic reads/writes to the VRAM?) Do you plan to have sprites like the TMS9918? Speaking of which, have you heard of the F18A? http://codehackcreate.com/archives/30

Exactly right. In fact, the red square is actually a “sprite”, in the sense that it is a bitmap being copied into VRAM from a storage area. I’ll be extending that into a font system, then later into sprites.

That F18A is really cool- thanks for sharing! If I’d known about that, I might not have embarked on this course. :) I did a bit of looking around for retro-like video chips, but couldn’t find any. Some people are building homebrew computers with vintage chips like the awesome Yamaha V9990, but those are hard to find and expensive. I wanted to make something that others can recreate with current off-the-shelf parts if possible.

We’ll see how this AVR approach pans out. I’m leaving the door open to redoing it all with an FPGA, similar to that F18A project.

You know, somehow using an FPGA seems like cheating when the support chip is 1000x+ more powerful than the real CPU, (and in fact could implement the CPU internally). So many pins, so many gates! (WWWD – What Would Woz Do ?;-)

However, since you’ve already done the down-to-the wire chip build, re-implementing something in FPGA would be educational for all involved. (I’m still in awe of your breadboarding skillz). It would actually be great to see your approach.

Congrats on the VGA build and thanks for sharing!

Speaking of alternate paths, would it be viable to use DPDRAMs like this one, hence allowing full (bank-switched) framebuffer access to the CPU? http://www.ebay.com/itm/271131439498

It seems pretty feasible to me. Dual-port RAM is very cool, and is something I didn’t know about when I set off on this project so many months ago. It’s definitely something I would have considered. I would probably shy away from DRAM, though. It requires refreshing, which is an extra challenge I’m not really interested in taking on.

Hrm. There’s DPSRAM, but they’re considerably more expensive. Five bucks for one KB. Oy vey. http://www.ebay.com/itm/400303838155

Well, for thirty bucks you’ll have a screen as good as that of the TRS-80 CoCo! But with one page only! Gakk.

Well, using external dual-port RAM is overkill when you have a SoC in the project, for sure. This would be useful if you were using FPGAs or CPLDs, because you would have an easy controller and plenty of memory.

The thing is that for VGA output you don’t need to refresh the cells, because reading a row refreshes that row. And H-Blank periods are perfect for the row open/close that DRAMs need.

Anyway a microprocessor is way too slow to drive a RAM. I dunno if a low cost CPLD could do, probably yes because of the simple logic needed to implement everything.

Somewhere in my junk box I have a sample TMS9918, made by WD. If I could find the required DRAM chips and the weird frequency rock, I’d like to build a video interface based on it. I do have some three-voltage 16K DRAM chips, which are actually 32K DRAM chips in 18-pin packages… each has TWO 16K DRAM dies on it in a dual-well package with twin RAS and CAS pins. They are probably a tad better idea than stacking two RAM chips to get twice as much. I was thinking of stacking two of them to get 64K for an old Z80 system back in the day…

Looks like a lash up similar to the TI-99/4 and TI-99/4A. Those computers had a powerful yet hobbled 9900 16 bit CPU with a whopping 256 BYTES of RAM directly usable by the CPU.

The 16K of RAM was on an 8 bit bus, all used as VRAM for the 9918 or 9918A Video Display Processor. The fancy CPU most of the time was simply being a director for code processed by the VDP. Data had to be buffered and held to go from 16 to 8 bits and back since the CPU and its 256 bytes of RAM were the only 16 bit things in the box.

It took adding a 32K RAM expansion and assembly language to unleash the full power of the Texas Instruments Home Computer.

There’s an FPGA version of the TMS9918A called the F18A. It’s a drop in replacement for that VDP as well as the PAL versions. It outputs to a VGA connector. It’s not simply a clone of the original, it fixes some of the issues and bugs – removes the 4 sprites on a line “feature”. There are plans to add features like a true bitmap mode with every pixel individually addressable for color instead of having to work with 8×1 pixel, 2 color blocks.

The F18A works in MSX1 computers, the Colecovision game console and anything else that has a 9918A or pin-compatible VDP.

I don’t know if you have looked into other design software or not, but there is a program for Windows that is a combination of QUCS and KiCad. It is called QUCSStudio and can be run on Linux using Wine. KiCad works just like Eagle, except it has none of the restrictions of the free version of Eagle. The QUCS portion is a circuit simulator. Under this version, though, you get to create your own C++ defined devices. You would have to monkey with it a bit, but you could potentially simulate your project with it for the most part.

Also, Microchip has the PIC32 microcontroller. If you get the right one, it has what they call USB On-The-Go. This means you can host USB devices with it. It also supports Ethernet. It is a 32-bit processor and I believe you can set it up for external memory.

I must say I am impressed. The R6502 was the workhorse of the age, but was treated to the steamroller by the Z80 and the I8086 and its friends. All of your work is definitely amazing. In fact I taught myself R6502 assembler to support working in BASIC on the Apple.

Thanks! I did the same thing back in the early 1980s- taught myself assembly when AppleSoft Basic got too frustratingly slow. The 6502 is a great architecture to learn on, due to the limited registers and clever addressing modes. If I had learned on the register-full 68000 that I spent most of my later assembly years on, I might have picked up some bad habits. 🙂 The 68k chips are still among my favorites, though. The ‘040 in particular had an awesome quad-word memory move instruction that made for some wicked fast sprite routines on early Sparcs and Macs.

Wow, this is a really cool project! Now we need to see a few games developed on your new system 😉

I forgot to ask: is the backplane I see to the left of everything one you built yourself, or one that you, ah, re-purposed? (It’s the one wearing Ronnie’s guts; Ronnie being a nickname for any woman named Veronica.) I’m still impressed, as it happens.

Yep, that’s one of the earlier Ronnie Bits that I made. Here’s the write up on that:

http://blondihacks.com/?p=732

You ran out of pins? Have you thought about using shift registers (serial in, parallel out)? And one pin be many!

I’m a big fan of shift registers, normally. That is, for example, how I got an ATTiny13 to drive 4 hexadecimal displays in my HexOut project (http://blondihacks.com/?p=654). However, the problem with shift registers is they cost you 8 clocks for every byte. In a timing-critical application such as video generation or multi-CPU synchronization, that amount of delay isn’t an option.
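The cost being described is easy to see in a pin-level model: pushing one byte into a serial-in/parallel-out shift register takes eight separate clock pulses, where a parallel port moves the whole byte in one write. The struct and functions below are hypothetical and ignore the extra instructions a real MCU spends toggling its data and clock pins for each bit.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of a serial-in/parallel-out shift register
   fed from one data pin and one clock pin. */
typedef struct {
    uint8_t  shift;        /* the register's parallel outputs */
    unsigned clock_pulses; /* clock edges generated so far */
} ShiftRegister;

/* One clock edge: everything moves over one place and the data pin enters at bit 0. */
static void clock_in_bit(ShiftRegister *sr, int bit) {
    sr->shift = (uint8_t)((sr->shift << 1) | (bit & 1));
    sr->clock_pulses++;
}

/* Send one byte MSB first: eight clock pulses per byte. */
static void shift_out_byte(ShiftRegister *sr, uint8_t value) {
    for (int i = 7; i >= 0; --i) {
        clock_in_bit(sr, (value >> i) & 1);
    }
}
```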

But you got 20Mhz and AVR can even be overclocked! That should suffice.

@Veronica: Also, a compromise: http://www.nxp.com/documents/data_sheet/74HC_HCT4015_CNV.pdf

At 20 MHz, I’m actually a little short of what’s needed to generate VGA, so I don’t have any cycles to spare. I’m cutting some corners already just to make it work at 20 MHz. Overclocking might work, but getting the timing right on the sync signals is very difficult, and I’d have to retime everything if I changed the clock speed. I appreciate your suggestions, though- I’m sure there are ways this system could be improved.
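To put a number on “a little short”, assume the standard 640×480 at 60 Hz VGA timing: a 25.175 MHz pixel clock and 800 pixel clocks per line, of which 640 are visible. The post doesn't say which mode Veronica actually generates, so this is only a back-of-the-envelope sketch.

```c
#include <assert.h>

/* Standard VGA 640x480 @ 60 Hz timing (assumed; not stated in the post). */
#define VGA_PIXEL_CLOCK_HZ 25175000.0
#define VGA_TOTAL_PIXELS   800.0 /* visible + porches + sync, per line */
#define VGA_VISIBLE_PIXELS 640.0

/* CPU clock cycles available per whole scanline. */
static double cycles_per_line(double cpu_hz) {
    return cpu_hz * VGA_TOTAL_PIXELS / VGA_PIXEL_CLOCK_HZ;
}

/* CPU clock cycles that fall within the visible part of a scanline. */
static double cycles_per_visible_line(double cpu_hz) {
    return cpu_hz * VGA_VISIBLE_PIXELS / VGA_PIXEL_CLOCK_HZ;
}
```

At 20 MHz that works out to roughly 635 CPU cycles per scanline, and only about 508 of them during the 640 visible pixels: less than one clock cycle per pixel before any time is spent fetching from VRAM.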

TBH, imho the vga generation should not be handled by a microcontroller at all. Maybe a large CPLD or a small FPGA would fit way better.

Thanks for your input. There are many ways to solve this problem. If you’re interested in the reasons I’ve gone with this approach, feel free to look back through the older articles on this project.

I have a possible optimization for your GPU code. Is it necessary to push your registers onto the stack in the interrupt routine? I ask because I just took a class that used the venerable HC11 (speaking of legendary processors), and the CPU automatically pushes the frame before the ISR runs and pops it afterward. I haven’t had a chance to learn assembly for any of Atmel’s chips, but I would be a little surprised if they didn’t have that programmer-friendly feature.

Also, what is your educational background? You make these amazing games, and then there’s this unholy hacking skill. Did you start out as a double-E?

Actually, no, I’m guessing you’re not an Electrical Engineer because none of my classmates or professors do work this clean.

Right now I’m making a board to interface classic game console controllers to a Raspberry Pi, including writing a Linux kernel driver (bleaaaaagh). I’m taking much inspiration from your approach to hardware design and your lessons on board etching.

So, thanks for publishing such nice blog-torials.

I’m certainly no expert on AVR assembly, but I believe I’m doing close to the minimum register preservation in those handlers. One big trick I’m using is that many of the registers are “reserved”. Almost all of the ones used by the signal generator, for example, are not allowed to be used anywhere else. That way I don’t have to save/restore them. That’s not to say this code is optimal- it certainly isn’t. It’s still very much in a “make it work” state at the moment.

I took Computer Science in school, and my background is primarily in software. I find the parallels between software engineering and electrical engineering very interesting- a lot of the same methodologies of design, implementation, and debugging apply. The main difference is that the debugging toys are a lot more expensive on the hardware side. 🙂

Thanks for your kind words! Your Pi project sounds really cool. I hope you document it somewhere! I’d love to see a homebrew solution to connecting 8-bit computer joysticks (like my beloved Mach-1 for my Apple //) to modern computers for use in emulators. Playing those old games just isn’t the same without the proper old joysticks.

Is the Mach-1 one of those joysticks that was just a potentiometer that controlled the firing time of a 555 in the computer? I think the Apple // used that method, like a million others. There was a nice write-up on Hackaday about those.

Considering the trouble the R-Pi has with current SNES emulators, after I’m done with the gamepad portion, adding support for old joysticks like that would be interesting. I’d like to make it into a universal console emulator; considering the performance problems maybe it could be an Atari and other 80’s-era computer emulator, complete with joystick.

I think I grew up in the wrong decade sometimes.

Total agreement on the debugging toys, by the way. With the university closed over the break, I have to make do with a multimeter and intuition, which probably explains me picking a software-heavy project.

Yep, you got it. The Apple joysticks were analog, which was quite unique at the time (and frankly, made for much better games in my opinion). For example, driving the cars in Autoduel was much better on the Apple // than, say, the Commodore 64 (whose joysticks were so touchy that the term “commie-twitch” was coined to describe the motion needed to make small adjustments).

The Apple // had a 556 on the motherboard, one 555 for each axis of the joystick. It was originally intended for game paddles (one pot each), but aftermarket joysticks came along later. The big disadvantage was that a single joystick used up both timers (one for each axis), so you couldn’t easily support multiple joysticks. This meant multi-player games usually sentenced one player to the keyboard controls. The C-64 and Atari computers were much better for multiplayer games, for this reason. On Archon, for example, the player on keyboard is going to get their butt kicked every time, because the joystick controls are always better. The running joke was that Apple // users didn’t need two joysticks, because they had no friends. 🙂
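The paddle-timer trick works like a crude analog-to-digital converter: software triggers the timer and counts how long its output stays high, which grows with the pot's resistance. Here is a minimal model, assuming a 555-style monostable (pulse width 1.1·R·C) with made-up component values and loop timing; it is not the Apple ROM's actual routine.

```c
#include <assert.h>

/* 555 monostable: the output stays high for t = 1.1 * R * C seconds. */
static double pulse_width_s(double r_ohms, double c_farads) {
    return 1.1 * r_ohms * c_farads;
}

/* Software "ADC": count polling-loop passes until the timer output drops,
   saturating at 255 like an 8-bit reading. loop_period_s is hypothetical. */
static unsigned paddle_reading(double r_ohms, double c_farads, double loop_period_s) {
    assert(loop_period_s > 0.0);
    double width = pulse_width_s(r_ohms, c_farads);
    double elapsed = 0.0;
    unsigned count = 0;
    while (elapsed < width && count < 255) { /* timer output still high */
        elapsed += loop_period_s;
        count++;
    }
    return count;
}
```

Because each axis needs its own timer running for the whole measurement, two timers are used up by a single joystick, which is the multiplayer limitation described above.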

If you’re interested in this stuff, all the early Apple // computers’ schematics are available online (they were printed in the back of the owner’s manual in those days). Woz documented them well, and they’re quite readable (as well as educational, and obscenely clever in many cases). Building an Apple // on a breadboard is entirely feasible, if you could get the ROM code and some of the more obscure chips that aren’t made anymore.

Can I just say I’ve never had a good comment conversation until now?

I’m not really interested in re-creating the actual old hardware. There is already a kit that is a clone of the original Apple pc, or maybe the Apple //e, not sure, with an SD card instead of floppies. I think it’s based on an FPGA.

Right now I try to make sure that the projects I do for fun still help me build profitable skills for when I do enter the job market. It looks like the embedded market is moving strongly towards ARM-based Linux solutions, so I figure writing drivers and developing custom hardware for a fairly representative system like the R-Pi should be preparatory for entering that kind of work.

I’m also very interested in game development, so the final result of an R-Pi with gamepad input would be a good test-bed for my game experiments. I’ve always preferred console style play to keyboard and mouse.

As a developer, are you interested in developing for the Ouya? I’m curious what a successful game developer like yourself thinks of that platform. Having hardware targeted at the independents seems like a huge step forward.

Sorry for clogging up your comments with a conversation like this, I don’t want to get too off-topic.

Honestly, I haven’t been very interested in the Ouya. I appreciate what they’re trying to do, but in my opinion, it’s not the right answer. It’s true that game development is in desperate need of a stronger indie segment, however. Similar to Hollywood, without indies to innovate and lead the way, the big studios will just churn out the same crap ad nauseam. How many more first-person-shooters and GTA clones does the world really need? I think the PC/Mac/Linux space is probably the best place for indies, though. The barrier to entry is low, the potential user base is large, and having the tools on the same platform as will be running the game is a big advantage for small operations. HTML5 is one to watch, also. If it lives up to the promise, it could provide a great platform for indies.

To expand on this a bit, the reason I think Ouya is off-track is that it’s on an evolutionary dead-end. The notion of dedicated gaming hardware is going away, IMHO. We’re converging on mobile devices at a furious rate, and I wouldn’t bank on big home consoles existing in 10 years. Once a tablet has comparable horsepower to an Xbox 360 (and they’re catching up fast), why wouldn’t I stream my game to the TV from that instead of needing a special box? The best way to reach people with a game is to put it on a device they already have.

I want to say you’re wrong about consoles going away, but that’s just my own wishful thinking. But then, are console game sales decreasing? With huge blockbusters like the annual Modern Warfare games, maybe that will keep the consoles alive and allow more diverse games to exist. Maybe that’s exactly the problem.

I find myself playing a game on my iPod so much more often than my consoles, I know you must be right. But then we won’t have joysticks and buttons! If everything is touchscreen, there goes that direct link between your brain and the game. I’m sure I sound like a broken record, but joysticks, man!

I’ve been playing the re-release of GTA: Vice City on iPad. Having played the original on the PS2, I have these weird phantom twitches, my hands trying to bang out the cheat codes that have been embedded in my neuro-musculature. Of course, that doesn’t work with onscreen touch controls, and that tactile feedback and experience isn’t there.

Anyway, I’m super excited about the increasing compactness and increasing power of our computers (especially as an EE student who might work on their designs one day), and we seem to be moving towards more intuitive interfaces with those devices. I’m just afraid we’ll lose something along the way that makes them more personal.

But then we can always lash together a classic CPU and support components and build our own experience.

By the way, I hope a twenty-something waxing nostalgic doesn’t sound a little pretentious.

Very nice project! This kind of thing really appeals to me. I got an EE, but then my career path took me far away from that! And even today most of my hacking is in Perl for work… But I have been feeling the itch to get back to the hardware, so your project really caught my attention!

Best of luck and keep the updates coming 🙂

gz to you for a really mega (couldn’t resist) project! I don’t often meet a blue meany with flair and style with their retrograding projects. I can’t wait to read the next installment.

Have you considered or seen the Contiki project?

I think it would be the icing on the cake for your system: a multitasking, multithreaded GUI OS that’s TCP/IP capable, with ports to the C64, A][, Atari 8-bit, 6502 Nintendo and way more.

Thanks! Yes, I have looked at Contiki a little bit, and it’s really cool. It may have a future on Veronica. 🙂

Hey,

Why don’t you just disable external interrupts and reenable them at VBL? This way it would be immune to VGA desync due to CPU commands being sent during the rendering.

Excellent question, David! The main reason I didn’t do this is that it wouldn’t really solve the problem. The CPU still has to play nice by not occupying too much of the GPU’s time towards the end of the blanking period, lest it step on the sync pulses. Disabling external interrupts would prevent new commands from being issued, which would help somewhat, but at a cost. Switching interrupts on and off takes quite a few cycles, which the GPU doesn’t really have to spare at that critical moment in the sync pulse loop. Since the CPU has to worry about what the VBL is doing anyway, I opted to keep the GPU code as efficient as possible and save the cycles for more rendering time.

Oh I see… You’re doing some high-level hacking here; it’s not easy to achieve this without problems. VGA signals are tricky, especially when running with low-frequency CPUs.

So just one more question: why are you using interrupts? I assume the CPU has a constant IPC, so every instruction takes the same number of cycles, and therefore the same time, to complete (maybe branches are the exception, though). Why don’t you just make a big loop to render and a VBL loop to read and execute commands? (That could be a little tricky, because it would execute a variable number of instructions and could break the timing.) I’m just thinking of the CPU as an FPGA or some hard logic.

Anyway, I wish you luck. Your project is amazing!

Well, to be honest, using a timer interrupt for the sync pulse generation is simply the first thing I tried and it worked so I’ve stuck with it. It’s entirely possible a simple procedural loop as you describe could work! It’s a realtime application, so from the very beginning I was in a realtime mindset, which generally means interrupts. 🙂

Yeah, you are right, but you usually want interrupts when there is something meaningful to do in the meantime, or when you need to be aware of multiple things happening (multiple interrupts). But a VGA interface is just outputting bits as fast as it can, so it’s faster to load a pixel, output it, and repeat as fast as possible. You could try an alternative firmware with even more resolution.
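To make the polled-loop idea from this exchange concrete, here is a rough behavioral model in Python (not AVR code, and not Veronica’s firmware) of a single loop that spends the visible lines pushing pixels and only drains a command queue during vertical blanking, stopping whenever the remaining time on a line can’t fit a whole command. The 525-line/480-visible frame is standard 640x480 VGA; `CYCLES_PER_BLANK_LINE`, `CYCLES_PER_COMMAND`, and `run_frame` are hypothetical names and numbers chosen only for illustration. The model also ignores that horizontal sync still has to be generated on every line, blanking or not.

```python
from collections import deque

# Standard 640x480 @ 60 Hz VGA frame: 525 total lines, 480 of them visible.
TOTAL_LINES = 525
VISIBLE_LINES = 480

# Hypothetical budgets, for illustration only: free cycles the loop gets on
# each blanking line, and the cost of executing one command from the CPU.
CYCLES_PER_BLANK_LINE = 600
CYCLES_PER_COMMAND = 250


def run_frame(commands: deque) -> list:
    """Simulate one frame of a polled (interrupt-free) video loop.

    Visible lines are spent outputting pixels, so no commands run there.
    During vertical blanking, whole commands are executed while the line's
    remaining cycle budget can still fit one. Returns the executed commands
    in order; anything left in `commands` waits for the next frame.
    """
    executed = []
    for line in range(TOTAL_LINES):
        if line < VISIBLE_LINES:
            continue  # pixel output would happen here
        budget = CYCLES_PER_BLANK_LINE
        while commands and budget >= CYCLES_PER_COMMAND:
            executed.append(commands.popleft())
            budget -= CYCLES_PER_COMMAND
    return executed


if __name__ == "__main__":
    queue = deque(range(100))
    done = run_frame(queue)
    print(len(done), len(queue))  # 45 blanking lines x 2 commands -> 90 10
```

The design point the model shows is the same one raised in the reply about VBL: the loop never starts a command it can’t finish, so the CPU side has to tolerate commands spilling over into later frames.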

I implemented a VGA interface on an FPGA for a project (a MIPS-like processor). It was a row/col counter that read data from a RAM, looked it up in a ROM, and output a pixel to a DAC. The processor could access the RAM at any time (it was dual-port), but an arbiter could be added to allow access only during VBL (so that a regular single-port RAM would suffice).
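The row/col counter design described in that comment can be sketched as a small behavioral model. This is Python rather than HDL, and it is not the commenter’s actual design: it uses the standard 640x480 @ 60 Hz VGA timing (800 pixel clocks per line, 525 lines per frame, about a 25.175 MHz pixel clock), while `framebuffer` (standing in for the RAM) and `palette` (standing in for the ROM lookup) are hypothetical names.

```python
# Standard 640x480 @ 60 Hz VGA timing (pixel clock ~25.175 MHz).
H_VISIBLE, H_FRONT, H_SYNC, H_BACK = 640, 16, 96, 48
V_VISIBLE, V_FRONT, V_SYNC, V_BACK = 480, 10, 2, 33
H_TOTAL = H_VISIBLE + H_FRONT + H_SYNC + H_BACK  # 800 clocks per line
V_TOTAL = V_VISIBLE + V_FRONT + V_SYNC + V_BACK  # 525 lines per frame


def timing(col: int, row: int):
    """Decode one counter state into (hsync, vsync, visible).

    Sync is reported as "active" here; on the wire, 640x480 uses
    negative-polarity (active-low) sync pulses.
    """
    hsync = H_VISIBLE + H_FRONT <= col < H_VISIBLE + H_FRONT + H_SYNC
    vsync = V_VISIBLE + V_FRONT <= row < V_VISIBLE + V_FRONT + V_SYNC
    visible = col < H_VISIBLE and row < V_VISIBLE
    return hsync, vsync, visible


def scan(framebuffer, palette):
    """Step the row/col counters through one frame, yielding DAC values.

    framebuffer[row][col] is the RAM read (a palette index), palette maps
    that index to an output level (the ROM lookup), and blanked positions
    output 0. Outside the visible area, the RAM is free for the processor,
    which is the window an arbiter would grant.
    """
    for row in range(V_TOTAL):
        for col in range(H_TOTAL):
            _, _, visible = timing(col, row)
            yield palette[framebuffer[row][col]] if visible else 0
```

For example, `timing(656, 0)` is the first clock of horizontal sync, and `timing(0, 490)` is the first line of vertical sync.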

BTW, which commands are you planning to implement on the GPU? Something like put pixel, draw line, fill rect…?

Yup, you’re not wrong. 🙂 The firmware is still in the very early stages, so I’ll probably try a few more things. As for the rendering API, for now I’m planning text and sprites. Beyond that, well, I’ll have to see how it goes.

This was easier to do with the Parallax Propeller on the Prop6502. Since I had 8 cores to work with I could run the video signal and the bus interface independently. Just an observation, not a criticism of your alternate approach. Really enjoying seeing your project move along!

That sounds like an interesting project, Dennis. Do you have a link?

Quinn,

If you want 7 more I/O lines on your AVR, you can multiplex the high and low address buses. I have a clone of the Lucid Science VGA generator where I use a latch for the high address. During horizontal blanking, I set the high address byte for the row, and then just count out low addresses when rastering. When writing, I try to do as many consecutive writes as possible, to avoid relatching the high address. One thing I never understood about the Atmel AVRs that actually have a real external memory bus is why they latch the low address. This forces the AVR to latch on every byte read, making the external RAM slower than it has to be. Sometime soon I will have a dedicated counter IC count out the rows, freeing up the CPU. Of course, I can’t write to the RAM while the pixels are being spit out, but at least the CPU could do something else. Every cycle counts.
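The pin arithmetic behind Mark’s suggestion: sharing one 8-bit port between both address halves frees 8 pins, and one of them goes back to strobing the latch, for a net gain of 7. The toy Python model below (a hypothetical `MultiplexedBus` class, not Mark’s firmware and not AVR code) counts latch strobes to show why rastering a row, or writing consecutive addresses, only pays the relatch cost when the high byte changes.

```python
class MultiplexedBus:
    """Toy model: one 8-bit port drives both halves of a 16-bit RAM
    address, and an external latch holds the high byte between accesses.
    (Hypothetical illustration only.)
    """

    def __init__(self):
        self.ram = bytearray(65536)
        self.latched_high = None  # nothing latched yet
        self.latch_count = 0      # number of times the latch was strobed

    def _select_high(self, high: int) -> None:
        # Strobe the latch only when the high byte actually changes; this
        # is why runs of consecutive addresses are cheap.
        if high != self.latched_high:
            self.latched_high = high
            self.latch_count += 1

    def write(self, addr: int, value: int) -> None:
        self._select_high(addr >> 8)
        self.ram[(self.latched_high << 8) | (addr & 0xFF)] = value

    def read_row(self, high: int, length: int = 256) -> bytes:
        # Rastering: latch the row's high byte once (e.g. during horizontal
        # blanking), then just count out the low addresses on the port.
        self._select_high(high)
        base = self.latched_high << 8
        return bytes(self.ram[base + low] for low in range(length))


if __name__ == "__main__":
    bus = MultiplexedBus()
    for addr in range(0x1000, 0x1200):  # 512 consecutive writes
        bus.write(addr, addr & 0xFF)
    print(bus.latch_count)  # 2: one strobe per 256-byte page
```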

-Mark

Quote: “If pressed, I could probably still rattle off the bit patterns for all the 68000’s addressing modes from memory. Good times.”

Yep. That’s when I knew your blog had me. In the article with “let’s change it to an infinite loop,” you showed the JMP XXYY instruction, and before I read any further my brain kicked out 4C YY XX 🙂

I disassembled all the ROMs in my C64 (including the floppy ones) back in the day and had to get good at identifying proper opcode sequences (since figuring out from a dump what was data and what was code was a challenge). So at least 4C and A9 have always stuck.
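The reason `JMP $XXYY` comes out of memory as `4C YY XX` is that JMP absolute is opcode $4C and the 6502 stores 16-bit operands little-endian, low byte first. A minimal Python sketch of hand-assembling the two opcodes mentioned above (the helper names `jmp`, `lda_imm`, and `infinite_loop` are hypothetical):

```python
# Two NMOS 6502 opcodes from the comment above.
JMP_ABS = 0x4C  # JMP absolute: opcode + 16-bit address (3 bytes)
LDA_IMM = 0xA9  # LDA immediate: opcode + 8-bit operand (2 bytes)


def jmp(addr: int) -> bytes:
    """Assemble JMP $XXYY. The 6502 is little-endian, so the operand is
    stored low byte (YY) first, then high byte (XX)."""
    return bytes([JMP_ABS, addr & 0xFF, (addr >> 8) & 0xFF])


def lda_imm(value: int) -> bytes:
    """Assemble LDA #$nn."""
    return bytes([LDA_IMM, value & 0xFF])


def infinite_loop(origin: int) -> bytes:
    """A JMP whose target is its own address: the classic spin-forever idiom."""
    return jmp(origin)


if __name__ == "__main__":
    print(jmp(0x1234).hex(" ").upper())  # 4C 34 12
```

Spotting those byte patterns in a raw dump is exactly the skill the comment describes: an `A9` followed by one byte, or a `4C` followed by a plausible in-ROM address, is a good hint that you’re looking at code rather than data.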

Hello, and first, thank you for the time you’ve spent on this blog. Enjoyable reading and interesting content all around.

“You should have seen some of the design monstrosities that didn’t make the cut.”

I’m probably a bit late, but I wonder why nobody has asked you to publish a gallery of those breadboard freaks. From reading around here, I imagine you take pictures even of the failed attempts.

Thanks! I’m glad you like it!

In this case, I was actually referring to drawing-board designs for possible GPU interfaces, so those “monstrosities” only existed on paper. I probably have the notes around here somewhere, but I never built any of them.